AI Act series #2: General-purpose AI models

15 May 2024

The European Artificial Intelligence Regulation (“AI Act”) contains an entire chapter devoted to general-purpose AI (“GPAI”) models. These models have emerged in recent years and are already part of our day-to-day lives, and this is only the beginning. This article provides a first overview of the upcoming obligations applicable to GPAI model providers.

Definition and scope

- Definition. A GPAI model is an AI model “that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications”. Examples of GPAI models include GPT-4, Mistral Large or Midjourney 6.

- Scope. The rules in Chapter V of the AI Act, which is dedicated to GPAI models, apply to providers of GPAI models once those models are placed on the market. These obligations are detailed below.

- Exceptions. These obligations (i) do not apply to GPAI models used solely for research, development, or prototyping activities, and (ii) only partly apply to providers of AI models released under a free and open license.

- Systemic risk. A specific regime applies to GPAI models with systemic risk, that is, a risk specific to the high-impact capabilities of a GPAI model that has a significant impact on the EU market. Providers of such models are subject to additional requirements: conducting thorough model evaluation, including adversarial testing; assessing and mitigating possible systemic risks; ensuring an adequate level of cybersecurity protection; and keeping track of relevant information about serious incidents and possible corrective measures.

GPAI and IP rights

- GPAI models are developed and trained using large datasets, including vast amounts of text, images and videos. Such content may be protected by copyright and related rights. Assuming that training involves the use of copyrighted content, such use requires the authorization of each right holder, unless a relevant copyright exception or limitation applies.

- The Text and Data Mining (TDM) exception is set out in Articles 3 and 4 of EU Directive 2019/790. This exception allows all entities to carry out TDM activities on copyrighted works and protected databases without individual authorization from right holders. For the exception to apply outside of scientific research, two cumulative conditions must be met: (i) the works must be lawfully accessible, and (ii) the right holders must not have opted out, i.e. expressly reserved their rights.

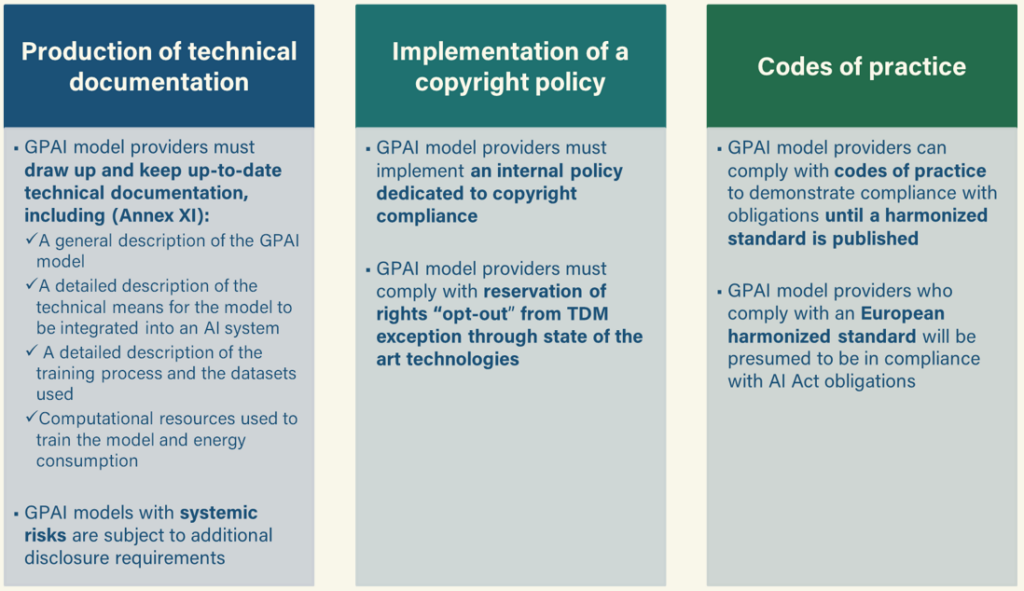

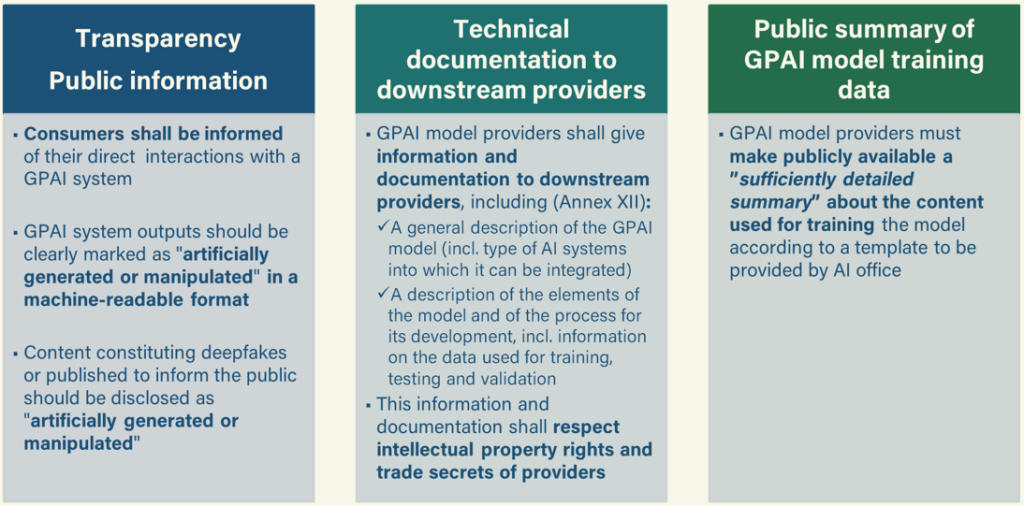

- The AI Act further clarifies that the TDM exception applies to the training of GPAI models on copyrighted datasets, and that providers of GPAI models must respect the conditions of that exception. In particular, two obligations apply: (i) putting in place a copyright policy, and (ii) making available a “sufficiently detailed summary of the content used for training the GPAI model (…) while taking into due account the need to protect trade secrets and confidential business information”.

Internal compliance

GPAI model providers’ obligations

External compliance

Next steps

- GPAI model providers will have to comply with these obligations within 12 months of the entry into force of the AI Act.

- GPAI model providers should begin by conducting a gap analysis between the AI Act requirements and their current practices, and start drawing up internal documentation as well as external transparency information.

- Regarding the specific issue of training datasets and copyright, the French Minister of Culture has entrusted the Conseil supérieur de la propriété littéraire et artistique (the French high-level advisory body on literary and artistic property) with two new missions:

- the first on the exact content of the “sufficiently detailed summary” of the data used for training GPAI models, which GPAI model providers must make publicly available. The conclusions of this mission will be presented by the end of 2024;

- the second on the remuneration of cultural content used by AI systems, in particular the economic and legal issues underlying access to data protected by literary and artistic property rights when such data is used by AI systems. The conclusions of this mission will be presented in 2025.

Written by Julie Carel, Inès Lalet